Glossary Term

Interpretability

The degree to which a human can derive meaning from the entire model and its components without the aid of additional methods.

Related terms: Explainability

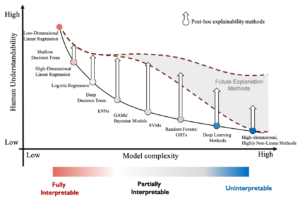

A fully interpretable model is one that has simulatability (the entire model can be contemplated at once), decomposability (each component of the model is human-understandable), and algorithmic transparency (one can understand how the model was trained) (Lipton 2016). A partially interpretable model may meet only one of these criteria. For example, low-dimensional linear regression is fully interpretable, whereas a shallow decision tree is partially interpretable (see Fig. 1).
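As a rough illustration of simulatability and decomposability, the sketch below writes out a two-feature linear model and a depth-2 decision tree by hand. The feature names, coefficients, thresholds, and class labels are hypothetical values chosen only for this example; they do not come from any fitted model or from the glossary's source.

```python
# Hypothetical coefficients for a two-feature linear model (illustrative values only).
INTERCEPT = 1.0
COEFFICIENTS = {"temperature": 0.5, "humidity": -0.25}


def predict(features):
    """Return the linear model's prediction for one sample."""
    return INTERCEPT + sum(COEFFICIENTS[name] * value for name, value in features.items())


def contributions(features):
    """Split a prediction into the additive contribution of each feature."""
    return {name: COEFFICIENTS[name] * value for name, value in features.items()}


def shallow_tree(features):
    """A depth-2 decision tree written as explicit rules (hypothetical thresholds)."""
    if features["temperature"] > 3.0:
        return "warm-humid" if features["humidity"] > 5.0 else "warm-dry"
    return "cold"


sample = {"temperature": 4.0, "humidity": 8.0}
parts = contributions(sample)
prediction = predict(sample)
label = shallow_tree(sample)

# The prediction is exactly the intercept plus the per-feature contributions,
# so the model's output can be read off without any additional explanation method.
print(parts)
print(prediction)
print(label)
```

Both models here are small enough to hold in mind at once, and each coefficient or split can be read directly. That property weakens as the number of features, or the depth of the tree, grows.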

Fig. 1 Illustration of the relationship between understandability and model complexity. Fully interpretable models have high understandability (with little to gain from explainability methods), while partially interpretable or simpler black-box models have the most to gain from them. As dimensionality and non-linearity increase, explainability methods can still improve understanding, but it is highly uncertain whether future explanation methods will be able to improve the understandability of high-dimensional, highly non-linear models.