Day 1 Discussion

On Day 1, we learned about the severe weather prediction problem, became more familiar with the data, trained machine learning models for the problem, performed some initial evaluations, and started investigating explainable AI (XAI).

Please have one of your team members reply in the comments to each of these questions after discussing them with the team.

See the Jupyter notebooks for this topic.

Discussion prompts

- The lectures today and the introduction to the Trust-a-thon both emphasized the importance of thinking about trust and the end user while developing AI. In this reflection, describe how your group thinks about both the trustworthiness of AI and how the end user fits into the AI development process. Then discuss how this thinking came into play during your first day of work on the Trust-a-thon: How did you integrate your user and/or their needs today? How would the day’s work have gone if you didn’t have an end user or had been assigned a different one?

- Finally, the lectures also covered interdisciplinary collaboration. With this in mind, take some time to think about how you worked together as a team: What went well and what could have been better? Did you integrate all team members? How will you keep doing things well for the rest of the week, and what is your plan for improving the areas that could have gone better?

Team #37:

1. Describe how your group thinks about both the trustworthiness of AI and how the end user fits into the AI development process.

Honestly, we didn’t. It was more of an implicit concern with being correct, that is, reaching the highest possible accuracy so that the end user would be delivered the best product.

2. How did you integrate your user and/or their needs today?

On Day 1, this didn’t really happen. We were focused on setting up the notebook and getting into the assignment.

3. How would the day’s work have gone if you didn’t have an end user or had been assigned a different one?

An end user farther removed from the subject matter would have pushed us to consider the use case more carefully and to be able to explain every aspect of the model, the choices made, and its errors. This made us realize that there is room for a certain level of sloppiness when producing results for end users who are close peers.

4. How you worked together as a team: What went well and what could have been better?

The rest of our team was totally MIA, and only Huang and I were actively meeting, working on things, and talking after lectures. We only started working together as a pair after Day 2 because I had hoped there would be more active chatting and organizing from within the team, but this didn’t happen, so we were a team of two. A good team, though! When organizing participants for the Trust-a-thon, I would recommend setting an expectation that team members communicate, attend video chats, and interact more on Slack. I would have liked to see what people were doing.

5. Did you integrate all team members?

We really tried!

6. How will you keep doing things well the rest of the week and what is your plan for improving the areas that could have gone better?

We set up daily post-lecture reviews, went over prompts and notebooks, and shared related research resources and ideas. It could have gone better if we had started on Day 1 and had more team members join us.

1. The trustworthiness of AI is linked to the concepts of explainability and interpretability, as was brought out in the Day 1 lectures. These concepts help communicate the usability of AI models to end users and can therefore lead to earlier adoption of AI techniques in the atmospheric and ocean sciences. I would say that AI models are at the same stage of acceptance that numerical weather prediction (NWP) models were at about 15 to 20 years ago. At that time, forecasters depended largely on synoptic methods and were skeptical of relying on NWP models. The situation changed gradually as NWP models proved viable and provided valuable input to forecasters. The primary reasons, I feel, were that forecasters came to realize the value NWP models provided and that the models matured through consistent improvements over time. The user’s perspective is therefore critical in bringing about early adoption of AI. This perspective can be developed through consistent communication to understand the requirements, user feedback on model performance, and honesty about the model’s shortcomings.

User requirements can diverge. For example, the emergency manager of a rural area would be more interested in whether severe lightning occurs within a large geographical area, but may not be much concerned with the precise direction in which the lightning moves over a short time span or the precise location where it occurs. In contrast, the director of a sports stadium may be interested in the precise temporal and spatial distribution of lightning and in its movement. The director’s requirements therefore differ from those of the emergency manager, and the solutions employed also need to differ. An AI model that meets the expectations of the emergency manager will not do the same for the director of the sports stadium.

2. An interdisciplinary approach is the optimal way forward for AI in the geosciences, as the community engages with complex problems of a global nature that are expected to grow progressively more complex. Our team exchanged ideas on Slack, which helped all of us enrich our knowledge and crystallize our thoughts.

Team 17

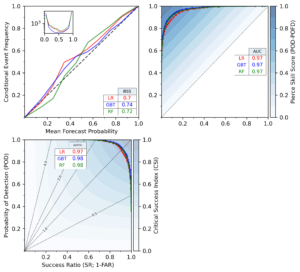

1. The trustworthiness of AI is an important issue in promoting the use of AI because AI is a relatively new concept and many people see it as a black box (as we saw in the earlier Slido responses). Some people trust only physical models; by addressing the trustworthiness of AI, we may help these people understand what the model is doing and become willing to use it. End users are important in the AI development process because our goal is to meet their needs with AI models. By integrating the user into the process, we can be more aware of the specific target location and purpose of the model. Without an end user, we could probably develop only a very general model. We selected the emergency manager as the end user in our discussion. For the emergency manager, a trustworthy AI model requires a high probability of detection and a low probability of false alarm, because they have limited teams and resources for fighting fires. Because the emergency manager must react quickly to lightning events, the model needs to be simple, efficient, and effective. Identifying the features that matter most for detecting lightning strikes from satellite images is vital. In addition, from a climatological perspective, if the AI model can learn the locations of past lightning strikes from satellite images, one could map lightning hotspots. The emergency manager could then take action in advance to prevent severe fires, such as building fire lines in the forests.

2. We mostly worked on our own notebooks and got familiar with the environment, so we didn’t work as a team much. However, we were able to pool our thoughts and write the blog post. Hopefully we can help each other with questions and collaborate during the rest of the week.
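Point 1 above asks for a high probability of detection and a low probability of false alarm. As a hedged illustration (not the team's notebook code), the sketch below scores yes/no lightning forecasts from a 2×2 contingency table. It assumes "probability of false alarm" is read as the false alarm ratio, FAR = false alarms / (hits + false alarms), and also reports the probability of false detection (POFD); the probabilities, labels, and the names `contingency_scores` and `_safe_ratio` are hypothetical.

```python
import numpy as np

def _safe_ratio(numerator, denominator):
    """Divide, returning NaN when the denominator is zero (e.g. no events observed)."""
    return float(numerator) / denominator if denominator > 0 else float("nan")

def contingency_scores(predicted, observed):
    """Return (POD, FAR, POFD) for binary yes/no lightning forecasts.

    POD  = hits / (hits + misses)                             probability of detection
    FAR  = false alarms / (hits + false alarms)               false alarm ratio (assumed reading)
    POFD = false alarms / (false alarms + correct negatives)  probability of false detection
    """
    predicted = np.asarray(predicted, dtype=bool)
    observed = np.asarray(observed, dtype=bool)
    hits = np.sum(predicted & observed)
    misses = np.sum(~predicted & observed)
    false_alarms = np.sum(predicted & ~observed)
    correct_negatives = np.sum(~predicted & ~observed)
    pod = _safe_ratio(hits, hits + misses)
    far = _safe_ratio(false_alarms, hits + false_alarms)
    pofd = _safe_ratio(false_alarms, false_alarms + correct_negatives)
    return pod, far, pofd

# Hypothetical model probabilities and observed lightning (1 = lightning occurred).
probs = np.array([0.9, 0.7, 0.4, 0.2, 0.8, 0.1, 0.6, 0.3])
obs = np.array([1, 1, 1, 0, 0, 0, 1, 0])

# A lower decision threshold says "yes" more often: more events caught, more warnings issued.
for threshold in (0.7, 0.5, 0.3):
    pod, far, pofd = contingency_scores(probs >= threshold, obs)
    print(f"threshold={threshold:.1f}  POD={pod:.2f}  FAR={far:.2f}  POFD={pofd:.2f}")
```

In this toy data, lowering the threshold from 0.7 to 0.3 raises POD from 0.50 to 1.00 but doubles POFD from 0.25 to 0.50. That is the trade-off an emergency manager with limited crews would need to weigh when choosing a threshold.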

Question 1

Considering the trustworthiness of the developed AI model and the end user, it is essential to test the model thoroughly. Once the model is trained, it should be tested on different datasets drawn from different periods and cross-checked against observations to establish its performance. In addition, close collaboration with the user is pivotal, as users can offer essential information based on their experience that helps with model verification. Specifically, for SEVIR users, the accuracy of the predicted spatial distribution seems especially critical, since false predictions would lead to severe consequences and wasted effort and resources. If we had not known the context of the model beforehand, we would have tended to think it was feasible to simplify the dataset a bit more to make the problem more tractable, as one might in an introductory research case study. However, knowing the model’s objectives provides context that better supports the interpretation of the results and the analysis of the model.
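The testing strategy described above, evaluating on data from periods the model did not see during training, can be sketched as a leave-one-period-out loop. This is a minimal illustration assuming NumPy, synthetic data, and hypothetical years; `fit_threshold` is a deliberately trivial stand-in for a real model, and neither function is taken from the Trust-a-thon notebooks.

```python
import numpy as np

def leave_one_period_out(periods):
    """Yield (held_out_period, train_idx, test_idx), holding out one period at a time."""
    periods = np.asarray(periods)
    for held_out in np.unique(periods):
        test_mask = periods == held_out
        yield held_out, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)

def fit_threshold(x, y):
    """Hypothetical stand-in model: pick the feature threshold with the best training accuracy."""
    candidates = np.unique(x)
    accuracies = [np.mean((x >= t) == y) for t in candidates]
    return candidates[int(np.argmax(accuracies))]

# Synthetic data: one feature, a noisy binary label, and a (hypothetical) year per sample.
rng = np.random.default_rng(0)
years = np.repeat([2017, 2018, 2019], 50)
x = rng.normal(size=years.size)
y = (x + rng.normal(scale=0.5, size=years.size)) > 0.0

# Train on all other years, then score on the held-out year only.
for year, train_idx, test_idx in leave_one_period_out(years):
    threshold = fit_threshold(x[train_idx], y[train_idx])
    accuracy = np.mean((x[test_idx] >= threshold) == y[test_idx])
    print(f"held-out {year}: threshold={threshold:.2f}, accuracy={accuracy:.2f}")
```

Holding out whole periods, rather than shuffling individual samples, keeps correlated samples from the same season out of both the training and test sets at once, which gives a more honest picture of how the model would perform on a new period.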

Question 2

Besides working on the notebooks, we had the opportunity to discuss some of their features, such as the Taylor diagram. We also had an exciting discussion on the trustworthiness of AI models and the end user. The collaboration was good, and the different perspectives and thoughts raised helped us develop our responses to the questions.
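For readers less familiar with the Taylor diagram mentioned above: it places a model on one plot using three related statistics against a reference, namely the standard deviations σ_f and σ_r, the correlation R, and the centered RMS difference E', which satisfy E'² = σ_f² + σ_r² − 2σ_fσ_rR (the law of cosines that gives the diagram its geometry). The sketch below computes them with NumPy on synthetic data; it illustrates the standard definitions and is not the plotting code used in the notebooks. The function name `taylor_statistics` is hypothetical, and population (ddof=0) statistics are assumed so the identity holds exactly.

```python
import numpy as np

def taylor_statistics(model, reference):
    """Return (std_model, std_ref, correlation, centered_rmsd) using population (ddof=0) statistics."""
    model = np.asarray(model, dtype=float).ravel()
    reference = np.asarray(reference, dtype=float).ravel()
    # Remove the means: the Taylor diagram only describes the pattern (anomaly) agreement.
    model_anom = model - model.mean()
    ref_anom = reference - reference.mean()
    std_model = np.sqrt(np.mean(model_anom ** 2))
    std_ref = np.sqrt(np.mean(ref_anom ** 2))
    correlation = np.mean(model_anom * ref_anom) / (std_model * std_ref)
    centered_rmsd = np.sqrt(np.mean((model_anom - ref_anom) ** 2))
    return std_model, std_ref, correlation, centered_rmsd

# Synthetic "model" that tracks the reference with damped amplitude plus noise.
rng = np.random.default_rng(42)
reference = rng.normal(size=1000)
model = 0.8 * reference + rng.normal(scale=0.3, size=1000)

s_f, s_r, r, e = taylor_statistics(model, reference)
# Check the law-of-cosines relation that lets all three statistics share one 2-D plot.
assert np.isclose(e ** 2, s_f ** 2 + s_r ** 2 - 2 * s_f * s_r * r)
print(f"std_model={s_f:.3f}  std_ref={s_r:.3f}  R={r:.3f}  E'={e:.3f}")
```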

Fantastic answer to question 1! This is a really excellent, user-centered approach that will lead to trustworthy AI. This level of engagement and collaboration with the end user is wonderful to see! Great job thinking very deeply about your user for your specific problem. This level of thought and engagement will help make your AI useful AND used!

Keep up the great work!