Day 2 Discussion

On Day 2, we learned more about explainability versus interpretability and about deep learning XAI methods.

Please have one of your team members reply in the comments to each of these questions after discussing them with the team.

See the Jupyter notebooks for this topic.

Discussion prompts

- Today we introduced the topics of AI explainability and interpretability. Building on what you learned in both sessions today, what do you think the goals of explainability and interpretability are (or should be) when working with your end user? In other words, why do (or should) these terms matter for end users?

- How can you use the techniques covered today (either in the lecture or in your trust-a-thon activity) to better meet the needs of your end users?

Question 1

From today's discussions and presentations, it emerged that although the terms explainability and interpretability might mean the same thing to most people, in AI they are two different concepts, both key to the development of AI. We learned that interpretability refers to the degree to which users can understand the model and its components without using additional methods, while explainability refers to the extent to which humans can decipher the model and its components using post-hoc methods. To me, explainability and interpretability form the bridge between the technical process itself and the specific question an ML model aims to address, allowing end users to convince themselves when analyzing the results and to gain trust in the ML model. In addition, they enable comparison and evaluation between ML results and conventional numerical/statistical methods, which is necessary for those who are just starting to work with AI/ML techniques.

The end user needs a model that can predict lightning flashes likely to cause a wildfire. Not all lightning strikes lead to wildfires, because ignition also depends on other factors such as the season and the density and type of vegetation in the wildland. To build a trustworthy AI model, its interpretability and explainability must be able to dissect this relationship, and the developer needs to understand the end user's decision-making process or strategy, which is a crucial input for the prediction.

When developing any AI model, the goal is to ensure that the model serves its purpose, so factoring in the end user and their level of understanding is critical. For example, in designing an AI model that predicts lightning likely to cause wildfires, it is essential that the model be easy to understand and well calibrated. The end user, an Emergency Manager, should be able to understand the model and its components and should find it easy to decode the model results in order to act in time.

Question 2

The presentations began by getting a feel for how the end user understands explainability and interpretability. We found this very useful for developing AI models: knowing what the user expects and how they operate helps align the final product in that direction. We also learned how to apply different methods to cross-check the developed model and increase confidence in applying it. Attribution maps, for example, are fundamental to understanding the strategies a neural network uses. Such methods help train and calibrate the model, improve forecast accuracy, and minimize errors. The knowledge gained will also play a key role in interpreting attribution maps, which, as we saw today, can significantly improve model performance.
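To make the idea of an attribution map concrete, here is a minimal sketch of one common variant, input × gradient, where each pixel's attribution is its value times the derivative of the model output with respect to that pixel. It assumes a hypothetical differentiable `toy_model` (a weighted sum passed through `tanh`) on a small 4×4 "image" and approximates the gradient with central finite differences; a real workflow would instead take gradients of the trained network through its framework's automatic differentiation.

```python
import numpy as np


def toy_model(image: np.ndarray) -> float:
    """Hypothetical stand-in for a trained network: tanh of a weighted pixel sum."""
    weights = np.linspace(0.0, 1.0, image.size).reshape(image.shape)
    return float(np.tanh(np.sum(weights * image)))


def input_times_gradient(model, image: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Attribution map = input * d(model)/d(input), gradient by central differences."""
    image = image.astype(float)  # astype returns a float copy
    grad = np.zeros_like(image)
    for idx in np.ndindex(image.shape):
        plus = image.copy()
        minus = image.copy()
        plus[idx] += eps
        minus[idx] -= eps
        grad[idx] = (model(plus) - model(minus)) / (2 * eps)
    return image * grad


rng = np.random.default_rng(0)
image = rng.random((4, 4)) * 0.1  # hypothetical small input field
attribution = input_times_gradient(toy_model, image)
print(attribution.round(4))
```

Pixels that the toy model weights more heavily receive larger attributions; the top-left pixel has weight zero, so its attribution is exactly zero.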

Question 1

We took the Emergency Manager in a Rural Area as an example. In the case study, the sub-SEVIR image dataset was used as a tabular time-series dataset, which means models trained on it cannot produce prediction maps of lightning strikes and therefore cannot meet the requirements of the Emergency Manager. We therefore based our discussion on an ideal situation.

For an Emergency Manager in a Rural Area, the key needs relate more to a regression task that predicts the intensity of lightning strikes at a given pixel and time:

1) Spatiotemporally resolved prediction maps of lightning strikes.
2) The uncertainty of the predictions (should we take the predictions as the main reference?).
3) How the models reach their decisions (are the models consistent with the manager's experience and domain knowledge?).

Because the Emergency Manager needs to respond to emergencies quickly, the predictions need a clear visualization that locates lightning strikes at a glance. The number of flashes can also be color-coded to clearly separate the areas with the most flashes from those with moderate activity, e.g., red for severe, orange for moderate, yellow for light, and white for none. If a red area coincides with forest regions with plenty of fuel, that area should be placed on high alert. In this way, the manager can dispatch teams and resources to the most threatened areas as soon as possible.

In addition, the Emergency Manager would care about model performance and attribution. Cross-checking with different machine learning methods, or attributing predictions to different inputs, gives them more clues about how the models make decisions and helps determine to what extent the models are doing something scientifically correct, and thus whether we can rely on the AI model. The explanations could also remind them to be cautious in specific scenarios (e.g., high water vapor, or the location of the storm core column).
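The red/orange/yellow/white color scheme suggested for the prediction maps can be sketched as a simple binning of per-pixel flash counts, plus a check for red pixels over fuel-rich forest. The thresholds in `THRESHOLDS` and the boolean `fuel_mask` are hypothetical assumptions for illustration; real values would have to be agreed with the Emergency Manager.

```python
import numpy as np

# Hypothetical flash-count thresholds:
# none < 1 <= light < 5 <= moderate < 20 <= severe
THRESHOLDS = [1, 5, 20]
COLORS = np.array(["white", "yellow", "orange", "red"])


def flash_count_to_color(flash_counts: np.ndarray) -> np.ndarray:
    """Map a grid of predicted flash counts to alert colors."""
    categories = np.digitize(flash_counts, THRESHOLDS)  # 0..3
    return COLORS[categories]


def high_alert(flash_counts: np.ndarray, fuel_mask: np.ndarray) -> np.ndarray:
    """Flag pixels that are 'red' and lie over fuel-rich forest (hypothetical mask)."""
    is_red = np.digitize(flash_counts, THRESHOLDS) == len(THRESHOLDS)
    return is_red & fuel_mask


predicted = np.array([[0, 2, 7], [25, 0.5, 19]])  # hypothetical model output
print(flash_count_to_color(predicted))
```

With these thresholds, `predicted` maps to `white, yellow, orange` in the first row and `red, white, orange` in the second.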

Question 2

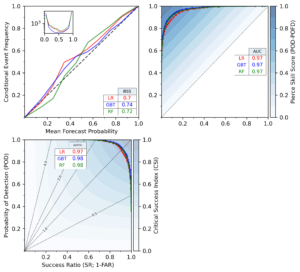

Permutation importance shows which features most influence the model's overall performance. If the ranking of the features is meteorologically reasonable, the Emergency Manager can have more trust in the model. Otherwise, we need to work with the manager to look for other explanations, such as the data distribution.
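A minimal sketch of permutation importance, assuming a fitted regression model exposed as a plain `predict` function and mean squared error as the score: shuffle one feature column at a time and measure how much the error grows. The toy data and the `predict` function are hypothetical (by construction the target depends strongly on feature 0, weakly on feature 1, and not at all on feature 2). scikit-learn's `sklearn.inspection.permutation_importance` provides a full implementation.

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when each feature column is shuffled independently."""
    rng = np.random.default_rng(seed)
    baseline = np.mean((predict(X) - y) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        scores = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling breaks the link between feature j and the target.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            scores.append(np.mean((predict(X_perm) - y) ** 2))
        importances[j] = np.mean(scores) - baseline
    return importances


# Hypothetical toy problem: y depends strongly on x0, weakly on x1, not on x2.
rng = np.random.default_rng(42)
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1]


def predict(A):
    # Hypothetical "perfect" fitted model.
    return 3.0 * A[:, 0] + 0.5 * A[:, 1]


imp = permutation_importance(predict, X, y)
ranking = np.argsort(imp)[::-1]  # most important feature first
print(imp.round(3), ranking)
```

The ranking comes out as feature 0, then 1, then 2; this is the kind of ordering an Emergency Manager would check against meteorological expectations.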

Accumulated local effects (ALE) plots show how sensitive the predictions are to a specific feature. The Emergency Manager can compare the patterns shown by the ALE plots with their domain knowledge to evaluate the reliability of the regression model.
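The values behind a first-order ALE plot can be computed in a few steps: split the feature into quantile intervals, average the change in prediction as each instance's feature value moves across its interval, accumulate these local effects, and center the curve. The sketch below assumes a hypothetical `predict` function that is quadratic in feature 0 and linear in feature 1, so the ALE curve for feature 0 should trace a U shape.

```python
import numpy as np


def ale_1d(predict, X, feature, n_bins=10):
    """First-order accumulated local effects (ALE) of one feature, centered to mean zero."""
    x = X[:, feature]
    edges = np.unique(np.quantile(x, np.linspace(0, 1, n_bins + 1)))
    n_intervals = len(edges) - 1
    # Interval k covers (edges[k-1], edges[k]]; the minimum goes into interval 1.
    bin_idx = np.clip(np.searchsorted(edges, x, side="left"), 1, n_intervals)
    local_effects = np.zeros(n_intervals)
    counts = np.zeros(n_intervals)
    for k in range(1, n_intervals + 1):
        rows = X[bin_idx == k]
        if len(rows) == 0:
            continue
        upper, lower = rows.copy(), rows.copy()
        upper[:, feature] = edges[k]
        lower[:, feature] = edges[k - 1]
        # Average prediction change when the feature moves across interval k.
        local_effects[k - 1] = np.mean(predict(upper) - predict(lower))
        counts[k - 1] = len(rows)
    ale = np.concatenate([[0.0], np.cumsum(local_effects)])  # accumulate
    # Center: subtract the count-weighted mean of the interval midpoints.
    midpoints = (ale[:-1] + ale[1:]) / 2
    ale -= np.sum(midpoints * counts) / np.sum(counts)
    return edges, ale


rng = np.random.default_rng(1)
X = rng.uniform(-2, 2, size=(500, 2))


def predict(A):
    # Hypothetical model: quadratic in feature 0, linear in feature 1.
    return A[:, 0] ** 2 + A[:, 1]


edges, ale = ale_1d(predict, X, feature=0)
print(np.column_stack([edges, ale]).round(3))
```

Plotting `ale` against `edges` gives the ALE curve; comparing its shape with expected physical behavior is the reliability check described above.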

Shapley values may help the Emergency Manager understand how individual features influence the predicted flashes (e.g., high water vapor, the location of the storm core column), so that they can take precautions in these situations.
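For a model with only a few inputs, Shapley values can be computed exactly by enumerating every coalition of features. The sketch below makes a simplifying assumption: features outside a coalition are replaced by a single baseline value (libraries such as `shap` use background distributions and approximations instead). The flash model and its inputs (`water_vapor`, `core_proximity`, `temperature`) are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance; absent features take their baseline value."""
    n = len(x)

    def value(coalition):
        # Model output when only features in `coalition` keep their actual values.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def predict(z):
    # Hypothetical flash model: water vapor and storm-core proximity interact.
    water_vapor, core_proximity, temperature = z
    return 2.0 * water_vapor * core_proximity + 0.5 * temperature


x = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(predict, x, baseline)
print(phi)
```

The interaction term (4.0) is split equally between water vapor and core proximity, temperature receives 0.5, and the three values sum to the difference between the prediction and the baseline prediction (4.5).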