Day 4 Discussion

On Day 4, we learned about quantifying and communicating uncertainty. Today we will consider how to quantify and communicate uncertainty for the Space problem, and reflect on what we have learned throughout the week.

Please have one of your team members reply in the comments to each of these questions after discussing them with the team. If you have not commented on the posts from the previous days, please add your thoughts there as well.

Here are the Space Weather Jupyter notebooks:

- More beginner oriented: https://github.com/ai2es/tai4es-trustathon-2022/blob/main/space/magnet_lstm_tutorial.ipynb

- More advanced user oriented: https://github.com/ai2es/tai4es-trustathon-2022/blob/main/space/magnet_cnn_tutorial.ipynb

The TAI4ES Space GitHub Readme page is here.

Discussion prompts

- Building on what we covered in the lecture today, how would you quantify uncertainty for your model? And how would you communicate that uncertainty to your end user?

- We’ve covered a lot in the summer school; take some time to reflect on what stood out to you and what really resonated. Think about what you all learned, what you still want to learn, and where you’re going from here.

Notes: Team 2, Day 4 (06/30/2022)

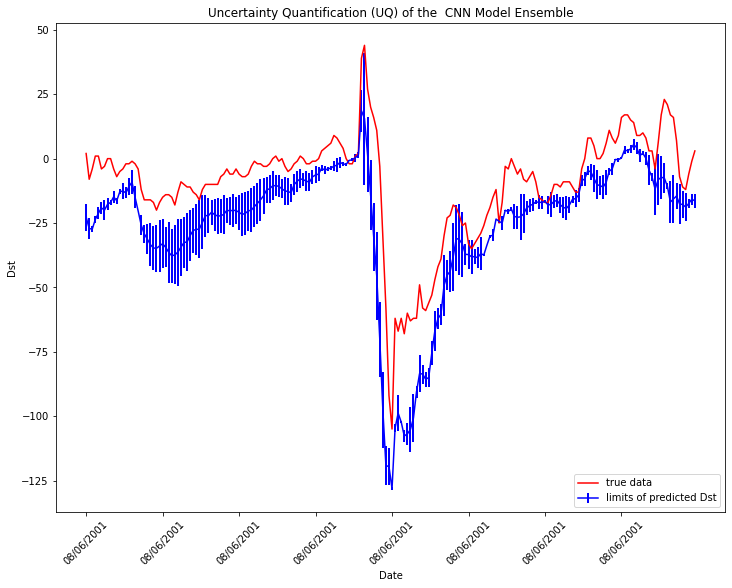

1.1 Building on what we covered in the lecture today, how would you quantify uncertainty for your model?

Answer:

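- Replace common loss functions such as mean squared error with a quantile regression (pinball) loss or a continuous ranked probability score (CRPS) loss function.

- Combine ensemble models trained with different loss functions, or built with ensemble methods such as AdaBoost; the spread of their predictions gives a range for the uncertainty.

- Instead of having the neural network (NN) output a single prediction, have it output a probability distribution (parametric prediction, e.g., the mean and standard deviation of a Gaussian), which can be thresholded based on the user’s requirements to enhance trustworthiness.

- Use the diversity of several models trained on slightly different data, synthetic data, engineered features, or initializations (deep ensembles).

- Keep dropout active for an appropriate percentage of neurons at prediction time and run many forward passes; the spread of the resulting predictions estimates the uncertainty (Monte Carlo dropout). Standard dropout used only during training is a regularizer against overfitting, while Monte Carlo dropout reuses it to estimate uncertainty.

- Use a Bayesian neural network, in which the weights and biases are probability distributions rather than fixed values.

- Generate evaluation plots and scores: reliability curve and attributes diagram, spread-skill plot, probability integral transform (PIT) diagram, discard test, and the CRPS for a holistic measurement of uncertainty.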
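The loss functions and scores named above can be sketched in plain Python. This is a minimal illustration under our own assumptions, not code from the Space notebooks, and all function names are hypothetical: `pinball_loss` is the quantile regression loss for one quantile level `tau`, `crps_ensemble` uses the standard empirical estimator CRPS = E|X - y| - 0.5 E|X - X'| over ensemble members (which could come from a deep ensemble or repeated Monte Carlo dropout passes), and `pit_value` is the fraction of members at or below the observation, the quantity binned in a PIT diagram.

```python
from statistics import mean


def pinball_loss(y_true, y_pred, tau):
    """Mean quantile (pinball) loss for predictions of the tau-th quantile."""
    losses = []
    for y, q in zip(y_true, y_pred):
        diff = y - q
        # Under-prediction (diff > 0) costs tau per unit; over-prediction costs (1 - tau).
        losses.append(max(tau * diff, (tau - 1) * diff))
    return mean(losses)


def crps_ensemble(members, y):
    """Empirical CRPS of one ensemble forecast against one observation y.

    CRPS = E|X - y| - 0.5 * E|X - X'|, where X and X' are ensemble members.
    Lower is better; for a single member it reduces to the absolute error.
    """
    m = len(members)
    accuracy = sum(abs(x - y) for x in members) / m
    spread = sum(abs(xi - xj) for xi in members for xj in members) / (m * m)
    return accuracy - 0.5 * spread


def pit_value(members, y):
    """Fraction of ensemble members at or below the observation (one PIT sample)."""
    return sum(1 for x in members if x <= y) / len(members)


if __name__ == "__main__":
    # Toy numbers for illustration only, not model output.
    print(pinball_loss([1.0, 2.0], [0.0, 3.0], tau=0.9))
    print(crps_ensemble([0.0, 2.0], 1.0))  # 0.5
    print(pit_value([0.0, 2.0], 1.0))  # 0.5
```

With `tau=0.5` the pinball loss is half the mean absolute error; training one output per quantile level (for example 0.1, 0.5, and 0.9) yields a prediction interval instead of a single value.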

1.2 And how would you communicate that uncertainty to your end user?

Answer:

- Understand the risk in situations where human values are at stake and the outcome is uncertain.

- Share the uncertainty evaluation metrics that are accepted in the end user’s domain (some users may focus on the model’s precision, while others may want to improve the model based on its recall).

- We, as the “who” assessing the uncertainty, should communicate with our end users by answering four questions: “what”, “in what form”, “to whom”, and “to what effect”. “What” includes the scientific models, biases, direct uncertainties, and the magnitude of the uncertainties.

“In what form” includes using percent chance to be clearer and making comparisons. “To whom” considers the audience’s numeracy, graphicacy, and mental models.

“To what effect” should incorporate the interests of the end users; e.g., precise predictions of geomagnetic storms can help ensure proper magnetic navigation of underwater submarines.

- Plot a few uncertainty figures to communicate uncertainty visually.
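The “in what form” advice (use percent chance, make comparisons) can be illustrated with a small helper that turns an ensemble of forecasts into a plain-language statement. This is a hypothetical sketch: it assumes the model produces several Dst index forecasts in nT (for example from a deep ensemble), and both the function name `storm_message` and the -50 nT storm cutoff are illustrative assumptions, not values taken from the notebooks.

```python
def storm_message(members, threshold=-50.0):
    """Summarize ensemble Dst forecasts (nT) as a percent-chance statement.

    A member at or below `threshold` counts as a storm forecast; the default
    cutoff is an illustrative assumption, not an operational definition.
    """
    if not members:
        raise ValueError("need at least one ensemble member")
    storm_count = sum(1 for x in members if x <= threshold)
    percent = round(100 * storm_count / len(members))
    low, high = min(members), max(members)
    return (
        f"{percent}% chance of a geomagnetic storm (Dst <= {threshold:g} nT); "
        f"forecasts range from {low:g} to {high:g} nT."
    )


if __name__ == "__main__":
    # Made-up ensemble values, for illustration only.
    # prints: 50% chance of a geomagnetic storm (Dst <= -50 nT); forecasts range from -80 to -20 nT.
    print(storm_message([-80.0, -60.0, -40.0, -20.0]))
```

Reporting the forecast range alongside the percentage gives the end user a comparison point; in keeping with the “to whom” and “to what effect” questions, the threshold and wording would be chosen together with the end users.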

2. We’ve covered a lot in the summer school; take some time to reflect on what stood out to you and what really resonated. Think about what you all learned, what you still want to learn, and where you’re going from here.

Answer:

What we all learned:

- We were amazed at how the summer school taught us to develop AI models from the end user’s perspective. To address uncertainty, instead of the commonly used mean squared error (MSE) loss, we could switch to quantile regression (QR) or CRPS losses.

- The summer school has taught us a lot about the importance of reproducibility, explainability, and interpretability of machine learning models. We learnt how important it is to work together with the end user in a STEM team. We also enjoyed learning more about the different XAI methods.

- We were exposed to the importance of developing relevant case studies that satisfy the requirements of end users. The importance of data bias, along with model bias, was a major takeaway.

- The interdisciplinary collaboration within our team, spanning Atmospheric Sciences, Computer Science, Geophysics, and Hydrology, was amazing, and we learnt a lot by unifying our perspectives in this project.

What we still want to learn:

- It would be nice to learn a collaborative approach for giving models a general set of specifications that addresses the needs of multiple users before adding user-specific add-ons, making model development generalizable, inexpensive, and scalable.

- Exploring multimodal machine learning with text, images, and audio to communicate model explanations clearly could be an interesting direction of study.

- Exploring multi-agent reinforcement learning, with defenders such as NASA and NOAA making precise geomagnetic predictions to protect assets like underwater magnetic instruments from solar storms, could inspire trust in AI models. These models rely on human-like dynamics of collaboration and competition, which we see as going beyond pattern-learning models like LSTMs or CNNs.

- It would be cool to get a primer on specific domains such as space science, to understand the terms used to interpret the machine learning models.

- Even though we learned that many methods could be implemented to develop XAI, we would like to know how to implement these methods hands-on with programming, instead of just learning the theory and executing existing code.

Where we’re going from here:

- We’ll go through the notebooks and examples used in the lectures to learn how to implement XAI.

- We’ll be using JupyterHub to run the notebooks with new adaptations.

- We have learned a lot during this week, including that there is so much more to learn. The lecture recordings, slide presentations, Trust-A-Thon notebooks, and provided materials are great resources to keep learning and exploring.

- We would be interested in future collaboration with the Space team leaders.

- We plan to incorporate these trustworthy AI techniques into our future research work.

- We would like to know about more opportunities in the area of Trustworthy AI.

- We would like to sincerely thank our team leaders, including Christopher, Douglas, Rob, LiYin, Manoj, and everyone in the AI2ES team, for preparing all the resources and guiding us this week.