Day 4 Discussion

On day 4, we learned about quantifying and communicating uncertainty. In the trust-a-thon session today, we want you to investigate uncertainties in the tropical problem and reflect on what you have learned throughout the week.

Please have one of your team members reply in the comments to each of these questions after discussing them with the team. If you have not commented on the posts from the previous days, please add your thoughts there as well.

Here is the TAI4ES Tropical notebook for reference.

Discussion prompts

- Building on what we covered in the lecture today, how would you quantify uncertainty for your model? And how would you communicate that uncertainty to your end user?

- We’ve covered a lot in the summer school. Take some time to reflect on what stood out to you and what really resonated: what you all learned, what you still want to learn, and where you’re going from here.

1. Four methods were introduced to quantify uncertainty: the attributes diagram, the spread-skill plot, the PIT histogram, and the discard test. Because these methods are abstract to most users, we should visualize the uncertainties and explain what each element of the plots means.

2. AI models are more explainable and trustworthy than we previously thought. They are NOT total black boxes if we find the right tools/methods to interpret them. We plan to apply these techniques in our future work!
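To make two of the diagnostics from point 1 concrete, here is a minimal NumPy sketch of the numbers behind a spread-skill plot and a PIT histogram. It is only an illustration under stated assumptions: the function names `spread_skill_curve` and `pit_values` are ours, the data are synthetic and calibrated by construction (not the Tropical dataset), and the binning choice is one of several reasonable options.

```python
import numpy as np

def spread_skill_curve(pred_mean, pred_std, obs, n_bins=5):
    """Return (mean predicted spread, RMSE) for each bin of predicted spread."""
    pred_mean, pred_std, obs = map(np.asarray, (pred_mean, pred_std, obs))
    # Equal-population bins, so every bin holds a similar number of cases.
    edges = np.quantile(pred_std, np.linspace(0.0, 1.0, n_bins + 1))
    bin_idx = np.clip(np.searchsorted(edges, pred_std, side="right") - 1,
                      0, n_bins - 1)
    spread = np.full(n_bins, np.nan)
    rmse = np.full(n_bins, np.nan)
    for b in range(n_bins):
        in_bin = bin_idx == b
        if in_bin.any():
            spread[b] = pred_std[in_bin].mean()
            rmse[b] = np.sqrt(np.mean((pred_mean[in_bin] - obs[in_bin]) ** 2))
    return spread, rmse

def pit_values(ensemble, obs):
    """PIT per case: fraction of ensemble members at or below the observation."""
    ensemble, obs = np.asarray(ensemble), np.asarray(obs)
    return (ensemble <= obs[:, None]).mean(axis=1)

# Synthetic toy forecasts (assumption: Gaussian errors with the stated spread).
rng = np.random.default_rng(0)
n_cases, n_members = 20000, 20
pred_std = rng.uniform(1.0, 5.0, size=n_cases)
pred_mean = rng.normal(30.0, 5.0, size=n_cases)
obs = pred_mean + pred_std * rng.standard_normal(n_cases)
ensemble = pred_mean[:, None] + pred_std[:, None] * rng.standard_normal(
    (n_cases, n_members))

spread, rmse = spread_skill_curve(pred_mean, pred_std, obs)
pit = pit_values(ensemble, obs)
```

Plotting `rmse` against `spread` gives the spread-skill curve; for well-calibrated forecasts the points should fall near the 1:1 line. A histogram of `pit` should be roughly flat, and a U shape or a hump would suggest under- or overdispersion, respectively.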

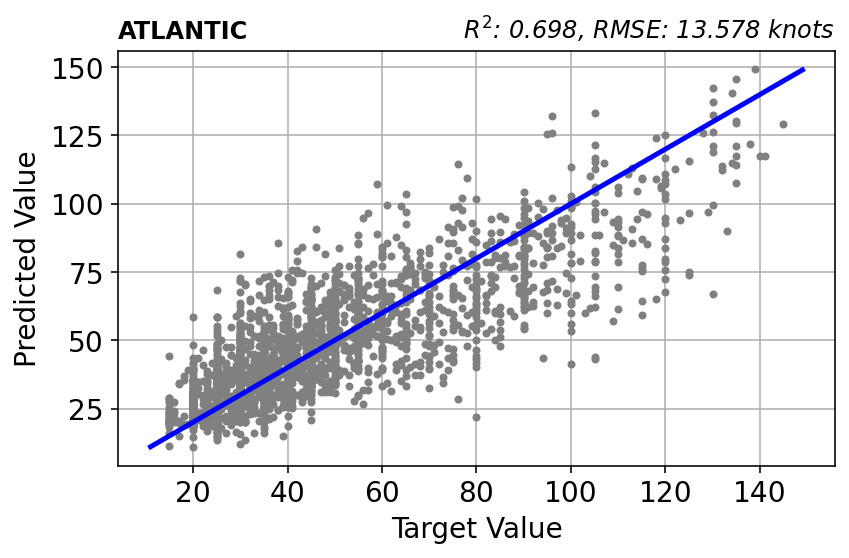

Q. 1: The topic of bias and model error is closely connected to uncertainty: the more we can reduce error and improve our predictions, the less uncertainty there is to deal with (up to a point, as some of the uncertainty is caused by chaotic Earth system dynamics). Because of this connection, we can train with an uncertainty-aware loss function, such as the quantile loss used in quantile regression, to improve the CRPS and ultimately improve the model. We also thought Bayesian neural networks are a promising approach, since they represent AI model results as a distribution instead of just point estimates. Although Gaussian distributions are commonly used, Weibull, Pareto, or log-normal distributions may improve model performance for this kind of data and allow uncertainty quantification in the case of rare events (i.e., high wind speed events in the context of our problem).

Additionally, as discussed earlier in the week, PCA is often used for dimensionality reduction. However, using only the first few modes can exclude some of the key features when the focus is on predicting high winds. Alternative techniques, like an autoencoder or variational autoencoder, may be more promising for improving the representation of extreme events in the low-dimensional representation of the dataset. These can be combined with, or can complement, climate science methods for improving rare event representation, like importance sampling, genealogical sampling algorithms, or clever use of ensembles.
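The loss used in quantile regression, mentioned above, is often called the pinball loss. A minimal NumPy sketch follows; the function name `pinball_loss`, the Weibull-distributed toy "wind speeds", and the grid search are our own illustrative assumptions, not something taken from the notebook.

```python
import numpy as np

def pinball_loss(y_true, y_pred, q):
    """Mean pinball (quantile) loss for quantile level q in (0, 1)."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    # Under-prediction (diff > 0) costs q per unit; over-prediction costs 1 - q.
    return np.mean(np.maximum(q * diff, (q - 1.0) * diff))

# Toy skewed sample standing in for wind speeds (assumption: Weibull, shape 2).
rng = np.random.default_rng(0)
wind = 20.0 * rng.weibull(2.0, size=5000)

# The constant prediction that minimizes the q = 0.9 pinball loss should sit
# close to the empirical 90th percentile of the sample.
candidates = np.linspace(0.0, 60.0, 601)
losses = np.array([pinball_loss(wind, c, 0.9) for c in candidates])
best_constant = candidates[np.argmin(losses)]
empirical_q90 = np.quantile(wind, 0.9)
```

Training one output per quantile level with this loss yields a set of predicted quantiles, and averaging the pinball loss over many levels approximates the CRPS up to a constant factor, which is why the two are so closely linked.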

For communicating uncertainty to the end user, we think this is a question of clever data visualization techniques, as shown in the lectures. Prediction intervals can be a straightforward way of communicating uncertainty to users. Careful use of words, whether written or spoken, can also help increase understanding, as when we say an event is “very likely” or “somewhat likely” to occur.
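As a rough sketch of the two ideas above, the snippet below turns an ensemble of predictions into a prediction interval and into a plain-language phrase. All function names are ours, the five-member ensemble is made up, and the probability cutoffs in `likelihood_phrase` are hypothetical values chosen only for illustration; any real wording scale should be agreed on with the end users.

```python
import numpy as np

def prediction_interval(ensemble, coverage=0.9):
    """Central prediction interval per case; members are on the last axis."""
    tail = (1.0 - coverage) / 2.0
    lower, upper = np.quantile(ensemble, [tail, 1.0 - tail], axis=-1)
    return lower, upper

def exceedance_probability(ensemble, threshold):
    """Fraction of ensemble members above the threshold, per case."""
    return np.mean(np.asarray(ensemble) > threshold, axis=-1)

def likelihood_phrase(probability):
    """Map a probability to words. Cutoffs are hypothetical, not a standard."""
    if probability >= 0.9:
        return "very likely"
    if probability >= 0.66:
        return "likely"
    if probability >= 0.33:
        return "somewhat likely"
    return "unlikely"

# One forecast case with five made-up ensemble members (wind speed, any unit).
ensemble = np.array([[20.0, 25.0, 30.0, 35.0, 40.0]])
lower, upper = prediction_interval(ensemble, coverage=0.5)
prob_high_wind = exceedance_probability(ensemble, threshold=33.0)
phrase = likelihood_phrase(prob_high_wind[0])
message = (f"50% prediction interval: {lower[0]:.0f} to {upper[0]:.0f}; "
           f"winds above 33 are {phrase}.")
```

Pairing the numeric interval with a consistent phrase lets users who prefer numbers and users who prefer words read the same forecast.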

Q. 2: Something that stood out to many of us was the Shapley and permutation importance methods from the first day. When working with stakeholders who trust weather/climate models over any AI method, it is critical to have an explanation for what caused the model result, as trust in machine learning can be low. Additionally, climate dynamicists who are used to thinking about theories and the physical constraints of the Earth system will want to understand the features that contributed to the AI model results, and ideally tie them back to the dynamics of the system. On the first day, we did PCA for dimensionality reduction, which was helpful for model stability but removed physical features from the model inputs. It would be helpful to have dimensionality reduction methods that keep the inputs physically interpretable, so that instead of talking about the importance of PC mode 3 to the end result, we can talk about a variable like temperature or cloud fraction.
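For readers who have not seen it, single-pass permutation importance can be sketched in a few lines of NumPy. This is an illustration under assumptions, not the notebook's implementation: the function `permutation_importance`, the feature names, and the linear stand-in "model" are all hypothetical, and the data are synthetic.

```python
import numpy as np

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when each input column is shuffled on its own."""
    rng = np.random.default_rng(seed)
    base_mse = np.mean((predict(X) - y) ** 2)
    importance = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling column j breaks its link to y but keeps its distribution.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            increases.append(np.mean((predict(X_perm) - y) ** 2) - base_mse)
        importance[j] = np.mean(increases)
    return importance

# Hypothetical physical inputs; the third one is unused by the stand-in model.
feature_names = ["temperature", "cloud_fraction", "unused_variable"]
rng = np.random.default_rng(1)
X = rng.standard_normal((2000, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + 0.1 * rng.standard_normal(2000)

def predict(inputs):
    """Stand-in for a trained model (assumption: it learned the true relation)."""
    return 3.0 * inputs[:, 0] + 0.5 * inputs[:, 1]

importance = permutation_importance(predict, X, y)
ranking = [feature_names[j] for j in np.argsort(importance)[::-1]]
```

Because the inputs here are named physical variables, the resulting ranking can be discussed directly in physical terms, which is exactly what is lost when the inputs are PC modes.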